Infero

Warning

This software is still under development and not yet ready for operational use.

Description

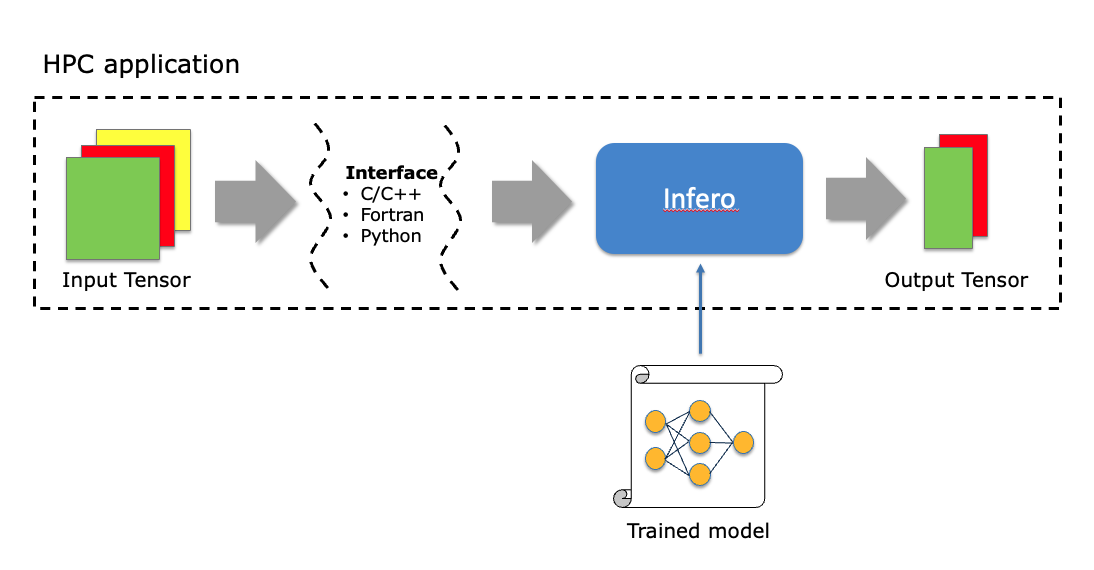

Infero is a machine learning support library that runs a pre-trained machine learning model for inference. Infero provides a common interface to multiple inference engines and can be called from multiple languages (C/C++, Fortran, Python).

Infero requires a relatively small dependency stack and is therefore also suitable for tightly-managed HPC environments.


Architecture

Infero is designed as a thin layer on top of interchangeable inference engines (backends) and provides a common API usable by multiple programming languages. It performs three main steps:

- Transfers input data from the caller application to the inference engine
- Runs the inference engine
- Transfers output data back to the caller application
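The three steps above can be sketched in C++ as a thin wrapper around an abstract backend interface. This is a minimal illustration under stated assumptions, not Infero's actual API: every name in it (`Tensor`, `InputView`, `Backend`, `DoublingBackend`, `Model`) is hypothetical. The toy backend stands in for a real inference engine, and output buffers are assumed to be pre-allocated by the caller with the correct size.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical owning tensor: flat float storage plus its shape.
struct Tensor {
    std::vector<std::size_t> shape;
    std::vector<float> data;
};
using TensorMap = std::map<std::string, Tensor>;

// Hypothetical non-owning view of a caller-provided input buffer.
struct InputView {
    const float* data;
    std::vector<std::size_t> shape;
};

// Hypothetical backend interface; each inference engine would implement run().
class Backend {
public:
    virtual ~Backend() = default;
    virtual TensorMap run(const TensorMap& inputs) = 0;
};

// Toy backend standing in for a real engine: doubles every value of every
// input and names each output "out_<input name>".
class DoublingBackend : public Backend {
public:
    TensorMap run(const TensorMap& inputs) override {
        TensorMap outputs;
        for (const auto& [name, tensor] : inputs) {
            Tensor out = tensor;
            for (float& v : out.data) v *= 2.0f;
            outputs["out_" + name] = std::move(out);
        }
        return outputs;
    }
};

// Thin layer over an interchangeable backend.
class Model {
public:
    explicit Model(std::unique_ptr<Backend> backend) : backend_(std::move(backend)) {}

    // Assumes each output buffer is pre-allocated by the caller with the right size.
    void infer(const std::map<std::string, InputView>& inputs,
               const std::map<std::string, float*>& outputs) {
        // Step 1: copy input data from the caller's buffers.
        TensorMap in;
        for (const auto& [name, view] : inputs) {
            std::size_t n = 1;
            for (std::size_t d : view.shape) n *= d;
            in[name] = Tensor{view.shape, std::vector<float>(view.data, view.data + n)};
        }
        // Step 2: run the inference engine.
        TensorMap out = backend_->run(in);
        // Step 3: copy output data back into the caller's buffers.
        for (const auto& [name, dst] : outputs) {
            assert(dst != nullptr);
            const Tensor& t = out.at(name);  // throws std::out_of_range if missing
            std::copy(t.data.begin(), t.data.end(), dst);
        }
    }

private:
    std::unique_ptr<Backend> backend_;
};
```

Keying inputs and outputs by name is one way such a layer could accommodate multiple-input multiple-output models. Switching engines in this design only requires another `Backend` subclass; `Model::infer` stays unchanged.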

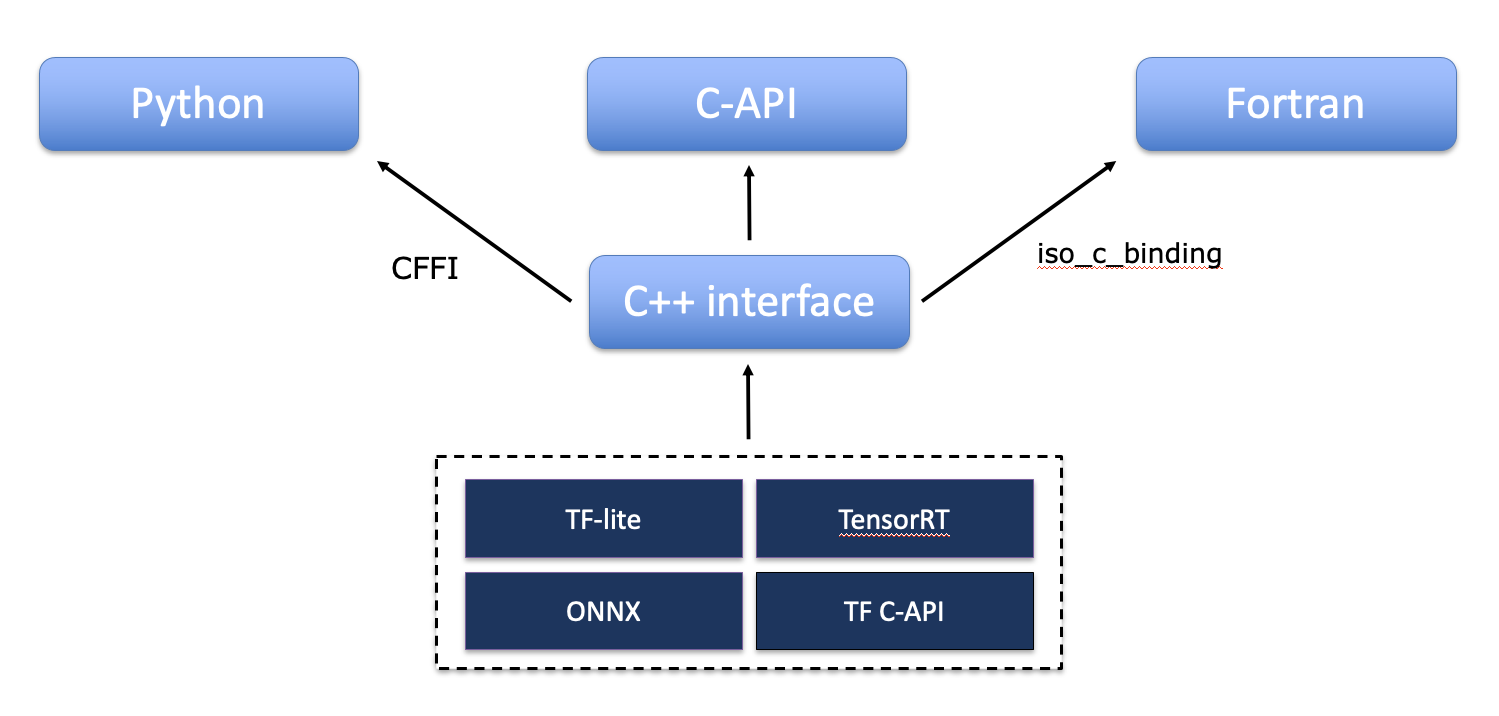

Infero accepts the input/output data formats commonly used in each language of the API. For example, a C++ application transfers data to and from Infero as raw memory buffers, a Python application uses NumPy arrays, and a Fortran application uses Fortran arrays.
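Raw memory buffers also make a natural boundary between languages. The sketch below makes no claims about Infero's real interface; all names in it (`ScaleModel`, `sketch_model`, `sketch_model_create`, `sketch_model_run`, `sketch_model_destroy`) are hypothetical. It wraps a C++ class behind `extern "C"` functions that take only an opaque handle and plain pointers. A C program can call functions of this shape directly, Fortran can bind to them with `ISO_C_BINDING`, and Python can pass a NumPy array's data pointer through `ctypes`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <exception>
#include <new>
#include <vector>

// C++ side: hypothetical model class working on std::vector.
class ScaleModel {
public:
    explicit ScaleModel(float factor) : factor_(factor) {}
    std::vector<float> run(const std::vector<float>& in) const {
        std::vector<float> out(in.size());
        for (std::size_t i = 0; i < in.size(); ++i) out[i] = factor_ * in[i];
        return out;
    }

private:
    float factor_;
};

// Opaque handle: C callers only ever hold a pointer to it.
struct sketch_model {
    ScaleModel impl;
};

extern "C" {

// Returns nullptr if allocation fails.
sketch_model* sketch_model_create(float factor) {
    return new (std::nothrow) sketch_model{ScaleModel(factor)};
}

// Reads n floats from `in` and writes n floats to `out`.
// Returns 0 on success and non-zero on error, so no C++ exception crosses into C.
int sketch_model_run(const sketch_model* m, const float* in, float* out, std::size_t n) {
    if (m == nullptr || in == nullptr || out == nullptr) return 1;
    try {
        std::vector<float> input(in, in + n);            // copy caller buffer in
        std::vector<float> result = m->impl.run(input);  // run the C++ code
        assert(result.size() == n);                      // this toy model preserves size
        std::copy(result.begin(), result.end(), out);    // copy result back out
        return 0;
    } catch (const std::exception&) {
        return 2;
    }
}

void sketch_model_destroy(sketch_model* m) { delete m; }

}  // extern "C"
```

In this design the caller owns both buffers, and the handle must be released with `sketch_model_destroy`. Returning status codes rather than throwing keeps the functions safe to call from languages that cannot catch C++ exceptions.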

Infero is developed primarily in C++, and the additional APIs for C, Fortran and Python are built on top of the C++ code. A schematic diagram of the API architecture is shown below:

Features

- API available for multiple languages: C, C++, Fortran, Python
- Inference engines supported:
- Support for multiple-input multiple-output models
- Automatic handling of C-style and Fortran-style tensors
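C lays out multi-dimensional arrays in row-major order (the last index varies fastest), whereas Fortran uses column-major order (the first index varies fastest). The sketch below shows one way to reorder a Fortran-style buffer into a C-style buffer for a tensor of any rank. The function name `column_major_to_row_major` is hypothetical, and the code does not describe how Infero implements this feature internally.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Convert a Fortran-style (column-major) buffer to C-style (row-major) order.
// `shape` lists the extents in logical index order: shape[0] is the first index.
std::vector<float> column_major_to_row_major(const std::vector<float>& src,
                                             const std::vector<std::size_t>& shape) {
    std::size_t total = 1;
    for (std::size_t d : shape) total *= d;
    assert(src.size() == total);

    const std::size_t rank = shape.size();
    // Row-major strides: the last index has stride 1.
    std::vector<std::size_t> row_stride(rank, 1);
    for (std::size_t k = rank; k-- > 1;) row_stride[k - 1] = row_stride[k] * shape[k];

    std::vector<float> dst(total);
    std::vector<std::size_t> idx(rank, 0);  // current multi-index
    for (std::size_t j = 0; j < total; ++j) {
        // src[j] is the element at multi-index idx; place it at its row-major offset.
        std::size_t r = 0;
        for (std::size_t k = 0; k < rank; ++k) r += idx[k] * row_stride[k];
        dst[r] = src[j];
        // Advance idx in column-major order (first index varies fastest).
        for (std::size_t k = 0; k < rank; ++k) {
            if (++idx[k] < shape[k]) break;
            idx[k] = 0;
        }
    }
    return dst;
}
```

For the 2x3 matrix with rows `{1, 2, 3}` and `{4, 5, 6}`, the column-major buffer `{1, 4, 2, 5, 3, 6}` becomes `{1, 2, 3, 4, 5, 6}`. Copying is only one option. A column-major buffer of shape (d0, ..., dn) has the same memory layout as a row-major buffer of shape (dn, ..., d0), so a library could also reinterpret the data with the shape reversed when the model accepts transposed axes.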

License

Infero is available under the open source Apache License, Version 2.0. In applying this licence, ECMWF does not waive the privileges and immunities granted to it by virtue of its status as an intergovernmental organisation nor does it submit to any jurisdiction.

- Authors: Antonino Bonanni, James Hawkes, Tiago Quintino
- Version: 0.1.0